From headlines to job descriptions, DevOps has emerged as an outsized buzzword over the past decade, and for good reason. Organizations that successfully adopt DevOps often see big gains in software development speed, improved reliability, faster product iterations, and an easier time scaling their services.

But despite its roots in software development, DevOps is a holistic business practice that combines people, processes, cultural practices, and technologies to bring previously siloed teams together to deliver speed, value, and quality across the software development lifecycle.

That means there’s often no one-size-fits-all approach. But there is a common set of practices and principles in any successful DevOps implementation.

In the following guide, we’ll explore those practices and principles and answer the following questions:

The main goal of DevOps is to deliver value: to produce better software faster, in a form people can use immediately without problems, and to do so in a way that links the underlying infrastructure, the software architecture, and engineering code releases with minimal friction.

In the software industry, there has long been a belief that organizations can either move fast or build reliable products. DevOps makes it possible to do both, and it accomplishes this in two big ways: by bringing the traditionally siloed development and operations teams together, and by building automation into every stage of the software development lifecycle (SDLC).

Coined by Patrick Debois in 2009, the term DevOps is a combination of “development” and “operations,” which explains the initial idea behind it: to bring development and operations teams into a closer working relationship.

Historically these two teams worked in silos. Developers coded and built the software, while operations ran quality assurance tests and worked on underlying infrastructure to ensure successful code deployments. This frequently led to difficulties deploying code as development and operations teams would come into conflict.

Where Debois and other early DevOps leaders such as Gene Kim and John Willis often broadly characterized DevOps as the integration of development and operations to streamline the software development and release processes, DevOps today has evolved into a holistic business practice that goes beyond individual teams.

At GitHub, we approach DevOps as a philosophy and set of practices that bring development, IT operations, and security teams together to build, test, iterate, and provide regular feedback throughout the SDLC.

Implementing DevOps will look different in every organization. But every successful DevOps environment abides by the following core principles:

To succeed, DevOps requires a close-knit working relationship between operations and development, two teams that historically were siloed from one another. By having both teams collaborate closely under a DevOps model, you encourage communication and partnership between them and improve your ability to develop, test, operate, deploy, monitor, and iterate upon your application and software stack.

Version control is an integral part of DevOps—and most software development these days, too. A version control system is designed to automatically record file changes and preserve records of previous file versions.
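As a rough illustration of what "recording file changes and preserving previous versions" means, here is a minimal Python sketch. The `VersionedFile` class and its methods are hypothetical names invented for this example. Real version control systems such as Git track whole repositories, authorship, and branches, none of which is modeled here.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class VersionedFile:
    """Toy version store: keeps every saved revision of one file's contents."""

    history: List[str] = field(default_factory=list)

    def save(self, contents: str) -> int:
        """Record a new revision only if the contents changed; return the latest revision number."""
        if not self.history or self.history[-1] != contents:
            self.history.append(contents)
        return len(self.history)

    def read(self, revision: Optional[int] = None) -> str:
        """Return the latest revision, or an earlier one by its 1-based number."""
        if not self.history:
            raise LookupError("no revisions recorded yet")
        if revision is None:
            return self.history[-1]
        if not 1 <= revision <= len(self.history):
            raise LookupError(f"unknown revision {revision}")
        return self.history[revision - 1]
```

Saving identical contents twice does not create a new revision, and any earlier revision stays readable after later saves.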

Automation in DevOps commonly means leveraging technology and scripts to create feedback loops between those responsible for maintaining and scaling the underlying infrastructure and those responsible for building the core software. From helping to scale environments to creating software builds and orchestrating tests, automation in DevOps can take on a variety of different forms.

The mindset we carry within our team is that we always want to automate ourselves into a better job.

Andrew Mulholland, Director of Engineering at Buzzfeed

Incremental releases are a mainstay in successful DevOps practices and are defined by rapidly shipping small changes and updates based on the previous functionality. Instead of updating a whole application across the board, incremental releases mean development teams can quickly integrate smaller changes into the main branch, test them for quality and security, and then ship them to end users.

Orchestration refers to a set of automated tasks that are built into a single workflow to solve a group of functions such as managing containers, launching a new web server, changing a database entry, and integrating a web application. More simply, orchestration helps configure, manage, and coordinate the infrastructure requirements an application needs to run effectively.
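One way to picture orchestration is as a set of tasks with prerequisites that must be arranged into a single runnable order. The sketch below uses Python's standard `graphlib` module (Python 3.9+) for that ordering. The task names and the `plan` helper are hypothetical and only loosely mirror the examples in the paragraph above; a real orchestrator would also execute the tasks and handle failures.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each task maps to the tasks that must finish first.
workflow = {
    "provision_server": set(),
    "start_database": {"provision_server"},
    "start_containers": {"provision_server"},
    "deploy_web_app": {"start_database", "start_containers"},
}


def plan(tasks: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task runs after its prerequisites."""
    return list(TopologicalSorter(tasks).static_order())


order = plan(workflow)
```

In this workflow the server is always provisioned first and the web application is always deployed last. The two middle tasks may appear in either order because neither depends on the other.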

In any conversation about DevOps, you’re apt to hear the term pipeline thrown around fairly regularly. In the simplest terms, a DevOps pipeline is a process that leverages automation and a number of tools to enable developers to quickly ship their code to a testing environment. The operations and development teams will then test that code to detect any security issues, bugs, or other problems before deploying it to production.

Continuous integration, or CI for short, is a practice where a team of developers frequently commit code to a shared central repository via an automated build and test process. By committing small code changes continuously to the main codebase, developers can detect bugs and errors faster. This practice also makes it easier to merge changes from different members of a software development team.

Continuous delivery is the practice of using automation to prepare software updates for release to end users. Continuous delivery always follows CI so that software can be automatically built and tested before it is released for general use. Taken together, CI and continuous delivery make up two-thirds of a typical DevOps pipeline. Critically, a continuous delivery model automates everything up to the deployment stage. At this point, human intervention is required to ship software to end users.

Continuous deployment, or CD, is the final piece of a complete DevOps pipeline and automates the deployment of code releases. That means if code passes all automated tests throughout the production pipeline, it’s immediately released to end users. CD critically removes the need for human intervention to orchestrate a software release, which results in faster release timelines. This also gives developers more immediate real-world feedback.
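The difference between continuous delivery and continuous deployment comes down to what happens after every automated stage has passed. The following Python sketch models that under simplifying assumptions. The `run_pipeline` function, its parameters, and the stage names are hypothetical, and each stage is reduced to a function that returns `True` on success.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a check that returns True when the stage passes.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], auto_deploy: bool, approved: bool = False) -> str:
    """Run stages in order and report what happens to the release.

    auto_deploy=True models continuous deployment: a passing build ships at once.
    auto_deploy=False models continuous delivery: a passing build waits until
    a person approves it.
    """
    for name, check in stages:
        if not check():
            # Stop at the first failing stage; nothing is released.
            return f"failed at {name}"
    if auto_deploy or approved:
        return "released"
    return "awaiting approval"
```

With the same passing stages, `auto_deploy=True` returns `"released"` straight away, while `auto_deploy=False` returns `"awaiting approval"` until `approved=True` is passed.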

Continuous monitoring is a set of automated processes and tooling that teams use to troubleshoot issues and that development teams can use to inform future development cycles, fix bugs, and patch issues.

A well established continuous monitoring system will typically contain four components:

Feedback sharing—or feedback loops—is a common DevOps term first defined in the seminal book The Phoenix Project by Gene Kim. Kim explains it this way: “The goal of almost any process improvement initiative is to shorten and amplify feedback loops so necessary corrections can be continually made.” In simple terms, a feedback loop is a process for monitoring application and infrastructure performance for potential issues or bugs and tracking end-user activity within the application itself.

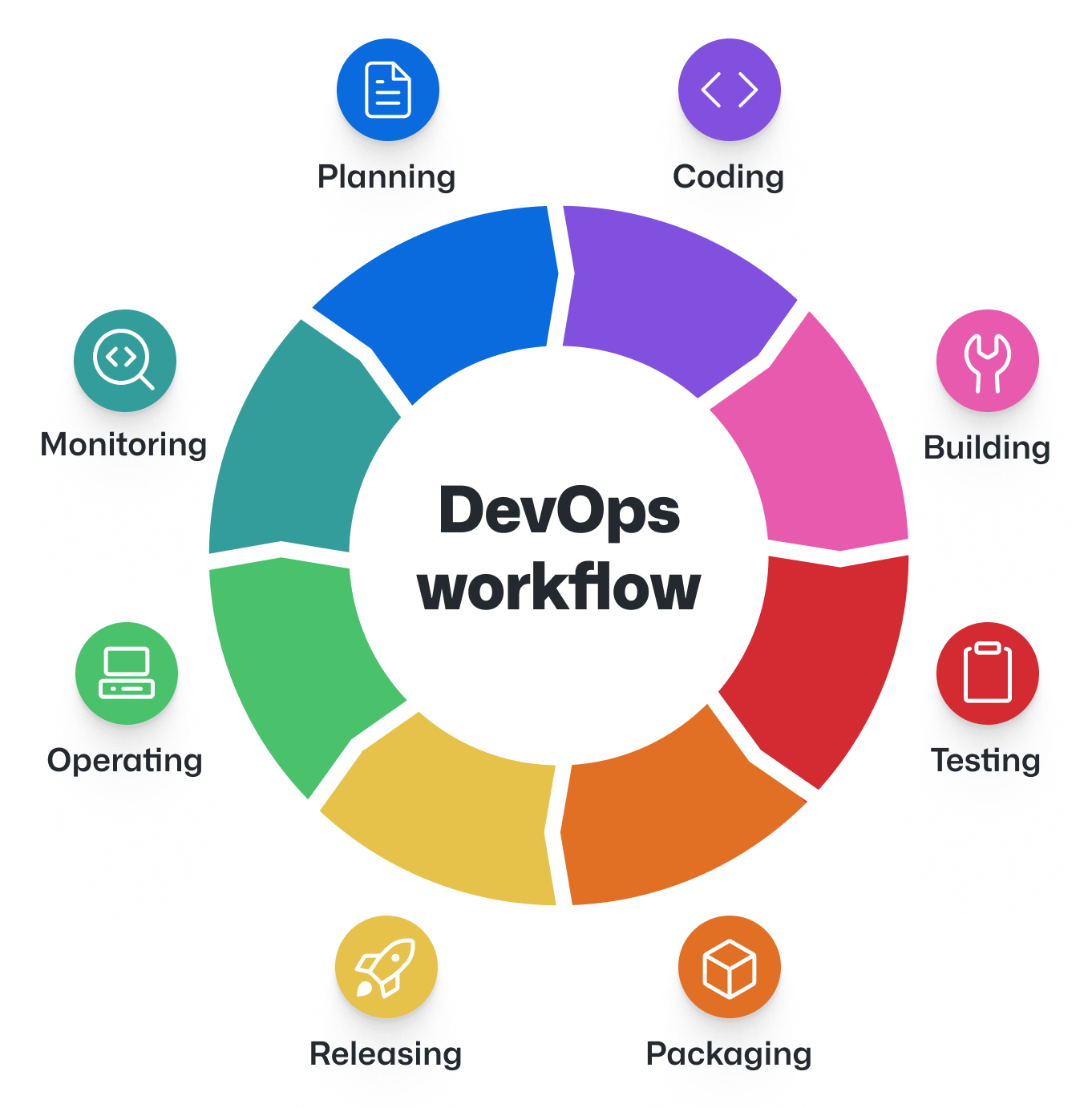

The DevOps lifecycle is rooted in finding collaborative ways to plan ahead, define application and infrastructure requirements, identify areas in the SDLC that can be automated, and make sure everyone is on the same page. This all comes back to the goal of DevOps: to deliver value and produce quality software that reaches end users as quickly as possible.

Typically a product manager and an engineering lead will work in tandem to plan roadmaps and sprints. Teams will leverage project management tools such as kanban boards to build out development schedules.

After planning is complete, developers will begin building code and engage in subsequent tasks such as code review, code merges, and code management in a shared repository.

As code is developed and reviewed, engineers will engage in merging—or continuously integrating—that code with their source code in a shared and centralized code repository. This is typically done with continuous integration to test code changes and version control tools to track new code commits.

During the build process, continuous testing helps ensure new code additions don’t introduce bugs, performance dips, or security flaws to the source code. This is accomplished through a series of automated tests that are applied to each new code commit.

Before launching a new iteration of an application, a team will package its code in a structure that can be deployed. This can sometimes include publishing reusable components via package ecosystems such as npm, Maven, or NuGet. This can also involve packaging code into artifacts, which are created throughout the software development lifecycle, and deploying them to a staging environment to do final checks and store any artifacts.

The team will release a new iterative version of an application to end users. This typically includes release automation tooling or scripts and change management tools which can be used in the event a change doesn’t work in deployment and needs to be rolled back.
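To make the rollback idea concrete, here is a minimal Python sketch of a release log that can revert to the previous version. The `ReleaseHistory` class and the version strings are hypothetical. Real change management tools also track who approved a change, what it contained, and how to restore data, which this example does not model.

```python
from typing import List, Optional


class ReleaseHistory:
    """Toy change log: remembers deployed versions so the latest can be undone."""

    def __init__(self) -> None:
        self._deployed: List[str] = []

    @property
    def live(self) -> Optional[str]:
        """The version currently serving end users, if any."""
        return self._deployed[-1] if self._deployed else None

    def release(self, version: str) -> None:
        """Record a new version as the live one."""
        self._deployed.append(version)

    def roll_back(self) -> str:
        """Drop the live version and return the one that is live afterwards."""
        if len(self._deployed) < 2:
            raise RuntimeError("no earlier release to roll back to")
        self._deployed.pop()
        return self._deployed[-1]
```

After releasing `"1.0"` and then `"1.1"`, calling `roll_back()` makes `"1.0"` the live version again.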

Throughout all stages of the SDLC, DevOps practitioners ensure the core infrastructure the application needs to run on works—this includes setting up testing environments, staging or pre-deployment environments, and deployment environments.

Once an application is being used by end users, there is also a need to ensure that the underlying infrastructure such as containers and cloud-based servers can scale to meet demand.

Practitioners will typically leverage infrastructure-as-code tools to make sure the underlying systems meet real-time demand as it scales up and down. They will also engage in ongoing security checks, data backups, and database management, among other things.
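Scaling to meet demand can be reduced to a simple rule: run enough instances to cover the current load, within fixed limits. The Python sketch below shows that arithmetic only. The `desired_instances` function, its parameters, and the default limits are assumptions made for this example and do not come from any particular infrastructure-as-code tool.

```python
import math


def desired_instances(requests_per_second: float, capacity_per_instance: float,
                      minimum: int = 1, maximum: int = 10) -> int:
    """Return how many instances are needed for the current load, within limits."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    # Round up so that a partial instance's worth of load still gets an instance.
    needed = math.ceil(requests_per_second / capacity_per_instance)
    # Clamp to the allowed range so the service never scales to zero or without bound.
    return max(minimum, min(maximum, needed))
```

For example, a load of 250 requests per second with 100 requests per second of capacity per instance yields 3 instances, while a load of zero still keeps the minimum of 1 running.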

DevOps practitioners implement a mixture of tooling and automation to engage in continuous monitoring across the software development lifecycle—especially after that software is shipped to end users. This includes service performance monitoring, security monitoring, end user experience monitoring, and incident management.

One of the essential parts of a successful DevOps workflow is making sure it’s “continuous,” or always on. This means setting up a process to ensure the workflow takes on a continuous recurring frequency—or, more simply, making sure you’re putting your DevOps workflow into practice.

The organizations that are most successful at DevOps don’t focus on building “DevOps teams,” but instead focus on practicing DevOps. In doing so, those organizations prioritize building DevOps environments that are collaborative with an all-in approach that extends across teams and focuses on an end-to-end product instead of siloed, incremental projects.

Everyone from operations and IT to engineering, product management, user experience, and design plays a role in a successful DevOps environment. The best DevOps practices focus on what role each person serves in the larger organizational mission instead of dividing out teams based on individual responsibilities.

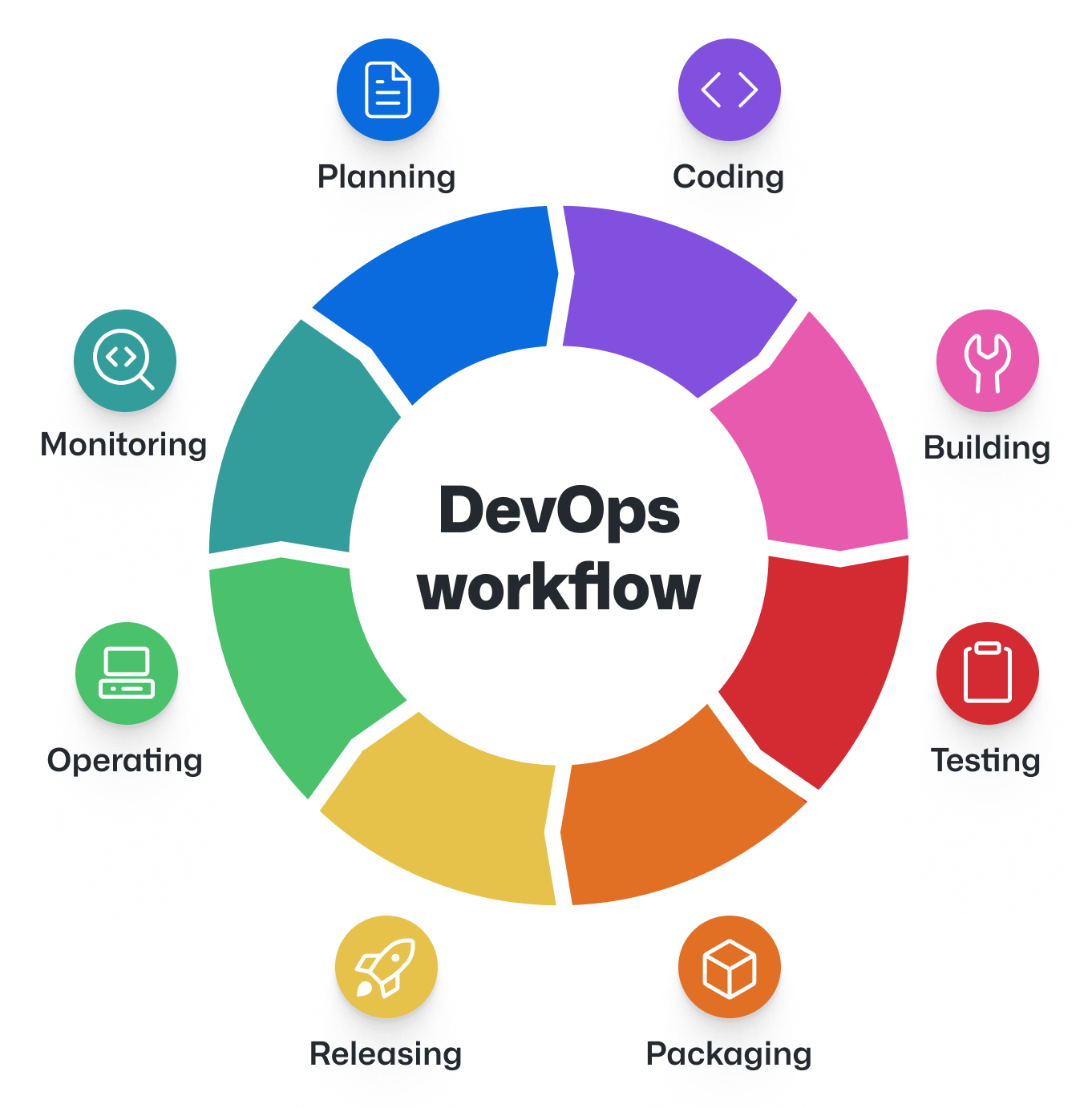

DevOps roles generally fall into four key areas:

DevOps is an organizational transformation rooted in breaking down silos—and that requires everyone's buy-in. While there are specific roles within any successful DevOps practice, the most successful companies avoid building a "DevOps team" that sits apart from the rest of their organization in a new silo.

Security is important throughout the entire DevOps lifecycle: in the code developers write, in the core infrastructure that operations teams build, orchestrate, scale, and monitor, in the automated security tests, and more. DevOps practitioners often leverage tools or create a number of scripts and workflow automations to continuously test their applications and infrastructure for security vulnerabilities. This practice is commonly called DevSecOps and is a derivative function of DevOps where security is prioritized as strongly as development and operations.

TL;DR: Standard DevOps best practices include cultural changes to break down silos between teams and technology investments in automation, continuous integration, continuous deployment, continuous monitoring, and continuous feedback.

According to Microsoft’s Enterprise DevOps Report, organizations that successfully transition to a DevOps model ship code faster and outperform other companies by 4-5x. The number of organizations that meet this bar is climbing fast, too. Between 2018 and 2019, there was a 185% increase in the number of elite DevOps organizations, according to the 2019 State of DevOps report from DORA. But becoming an elite DevOps organization requires a seismic cultural shift and the right tools and technologies. How can organizations find success when adapting to and adopting a DevOps practice?

At GitHub, we have identified eight common best practices:

This stands in contrast to monolithic application architecture, where the underlying infrastructure is organized in a single service. That is, if demand on one part of the application spikes, the entire infrastructure needs to scale to meet that demand. Monolithic architectures are also more difficult to iterate upon because updating one part of the application requires a redeployment of the full codebase.

Here’s a fun fact: In the early 2010s, DevOps was called the second decade of agile development with many seeing it as a natural successor to agile methodology.

Despite this, DevOps and agile development aren’t the same thing—but DevOps does build upon and leverage agile methodology, which often leads people to conflate the two practices.

At its simplest, agile development methodology seeks to break large software development projects into smaller chunks of work that teams can quickly build, test, get feedback on, and then use to create the next iteration.

By contrast, DevOps methodology fundamentally seeks to bring large, historically siloed teams (developers and operations) together to enable faster software development and release cadences.

But the biggest difference is that DevOps is a whole-business strategy focused on building end-to-end software solutions fast. By contrast, agile is often focused purely on functional software releases.

GitHub is an integrated platform that takes companies from idea to planning to production, combining a focused developer experience with powerful, fully managed development, automation, and test infrastructure.

GitHub helps the company’s long-standing efforts to accelerate development by breaking down communication barriers, shortening feedback loops, and automating tasks wherever possible.

Mike Artis, Director of System Engineering at ViacomCBS